Project details

- Funder: Defense Advanced Research Projects Agency (DARPA)

- Program: Reverse Engineering of Deceptions (RED)

- Years of activity: 2020-2024

Abstract

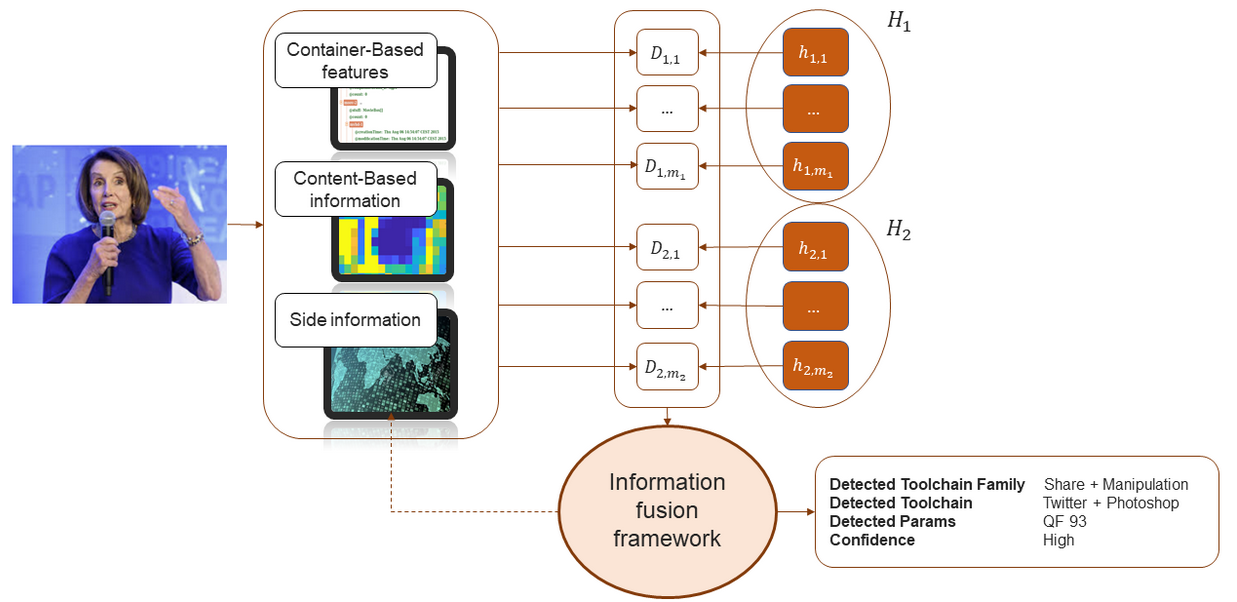

The UNCHAINED project aims to design a reliable forensic analysis framework that can characterize the signatures left by different toolchains on digital images and videos. Its core technical challenge is to build an information fusion system that combines content-based statistics, container-based characteristics, and additional side information (when available) to probabilistically map the media object under analysis first to a toolchain family and then to a specific toolchain, built upon a scalable set of basic operations.
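The two-stage mapping, first to a family and then to a toolchain within it, can be read as a chain-rule factorization: if every toolchain belongs to exactly one family, then P(toolchain | x) = P(family | x) · P(toolchain | family, x). The sketch below assumes some classifier (not shown) already supplies both factors; the function name and the labels `fam_A`, `tc_A1`, and so on are hypothetical.

```python
from typing import Dict


def toolchain_posteriors(
    family_probs: Dict[str, float],
    toolchain_given_family: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """Combine P(family | x) and P(toolchain | family, x) into P(toolchain | x).

    Assumes each toolchain belongs to exactly one family (hypothetical setup).
    """
    posteriors: Dict[str, float] = {}
    for family, p_family in family_probs.items():
        for toolchain, p_cond in toolchain_given_family.get(family, {}).items():
            # Chain rule: P(t | x) = P(f(t) | x) * P(t | f(t), x).
            posteriors[toolchain] = p_family * p_cond
    return posteriors


# Hypothetical example: two families, three toolchains.
families = {"fam_A": 0.7, "fam_B": 0.3}
within = {"fam_A": {"tc_A1": 0.6, "tc_A2": 0.4}, "fam_B": {"tc_B1": 1.0}}
post = toolchain_posteriors(families, within)
best = max(post, key=post.get)
```

Scoring the joint posterior rather than committing greedily to the top family can change the answer: if P(A | x) = 0.55 is split evenly between two toolchains and P(B | x) = 0.45 falls on a single toolchain, the greedy choice picks a toolchain of family A (0.275), while the joint posterior favours the toolchain of family B (0.45).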

The UNCHAINED framework

The UNCHAINED project seeks to create a streamlined process for extracting signatures from images and videos that reveal their digital history. The information fusion system is designed to adapt efficiently to new data and new toolchains from only a few examples. The detectors and signatures build on findings in multimedia forensics, drawing on feature representations from the existing literature that can be adapted to reverse-engineering requirements.
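One simple way to add a new toolchain from a handful of examples without retraining is a nearest-class-mean (prototype) classifier over fixed feature vectors. The sketch below is an illustrative stand-in, not the project's fusion method; the class name, labels, and feature values are hypothetical.

```python
import math
from typing import Dict, List, Sequence


class PrototypeClassifier:
    """Nearest-class-mean classifier: a new class needs only a few examples."""

    def __init__(self) -> None:
        self._sums: Dict[str, List[float]] = {}
        self._counts: Dict[str, int] = {}

    def add_examples(self, label: str, features: Sequence[Sequence[float]]) -> None:
        # Accumulate running sums so prototypes update without revisiting old data.
        for f in features:
            if label not in self._sums:
                self._sums[label] = [0.0] * len(f)
                self._counts[label] = 0
            self._sums[label] = [s + x for s, x in zip(self._sums[label], f)]
            self._counts[label] += 1

    def prototype(self, label: str) -> List[float]:
        # The prototype is the mean feature vector of the class.
        n = self._counts[label]
        return [s / n for s in self._sums[label]]

    def predict(self, feature: Sequence[float]) -> str:
        # Assign to the class whose prototype is closest in Euclidean distance.
        return min(self._sums, key=lambda lbl: math.dist(feature, self.prototype(lbl)))
```

Adding a toolchain amounts to one `add_examples` call with its few labelled feature vectors; the existing prototypes are left untouched.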

For digital images, container-based features are extracted from the metadata, the JPEG header, and the Huffman and quantization tables, all of which can reveal previous processing steps. Statistical analysis of these data characterizes the impact of different toolchain families, making it possible to tell the families apart. Content-based features for images rely on Discrete Cosine Transform (DCT) coefficients and noise residuals: both the DCT coefficients and the noise-like signals obtained through denoising have proven effective in provenance studies.
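As an illustration of container-level evidence, the quantization tables can be read directly from the DQT (0xFFDB) marker segments of a JPEG file, stopping at the start-of-scan marker where entropy-coded data begins. This is a minimal standard-library sketch of the JPEG marker layout defined in ITU-T T.81, not the project's feature extractor; it skips metadata and Huffman tables, and the function name is hypothetical.

```python
import struct
from typing import Dict, List

SOS, EOI, DQT = 0xDA, 0xD9, 0xDB  # JPEG marker codes (second byte after 0xFF)


def read_quantization_tables(data: bytes) -> Dict[int, List[int]]:
    """Return {table_id: 64 coefficients in zigzag order} from JPEG bytes."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    tables: Dict[int, List[int]] = {}
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte preceding a marker
            pos += 1
            continue
        if marker in (SOS, EOI):  # header is over; scan data follows
            break
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2 : pos + 4])
        segment = data[pos + 4 : pos + 2 + length]
        if marker == DQT:
            i = 0
            while i < len(segment):
                # High nibble: precision (0 = 8-bit, 1 = 16-bit); low nibble: table id.
                precision, table_id = segment[i] >> 4, segment[i] & 0x0F
                i += 1
                if precision == 0:
                    tables[table_id] = list(segment[i : i + 64])
                    i += 64
                else:
                    tables[table_id] = list(struct.unpack(">64H", segment[i : i + 128]))
                    i += 128
        pos += 2 + length
    return tables
```

Usage: `read_quantization_tables(open(path, "rb").read())`. Comparing the returned tables with those produced by known encoders or platforms is one simple way such container data can hint at a previous re-compression.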

For videos, container-based features come from analyzing the structure of the file format to determine whether it is consistent with native camera formats or reflects specific processing, with a focus on the distinctive alterations introduced by different toolchains. Content-based features for videos are explored through both frame-wise techniques and spatio-temporal features; descriptors such as Local Binary Patterns (LBP) and Local Derivative Patterns (LDP) provide compact representations of video sequences.
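As a concrete example of a frame-wise content feature, the sketch below computes the basic 3x3 LBP code (each of the 8 neighbours sets one bit when it is at least as bright as the centre pixel) and summarizes a grayscale frame as a normalized 256-bin histogram. Averaging per-frame histograms is only one simple, assumed way to obtain a sequence-level descriptor: it ignores temporal structure, which spatio-temporal variants and LDP are meant to capture, and LDP itself is not shown. Function names are hypothetical.

```python
from typing import List, Sequence

# Clockwise 8-neighbourhood offsets (row, col), starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(frame: Sequence[Sequence[int]]) -> List[float]:
    """Normalized 256-bin histogram of basic 3x3 LBP codes for one grayscale frame."""
    rows, cols = len(frame), len(frame[0])
    hist = [0] * 256
    for r in range(1, rows - 1):  # border pixels lack a full neighbourhood
        for c in range(1, cols - 1):
            center = frame[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(OFFSETS):
                if frame[r + dr][c + dc] >= center:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * 256


def video_lbp_descriptor(frames: Sequence[Sequence[Sequence[int]]]) -> List[float]:
    """Average the per-frame LBP histograms into one compact sequence descriptor."""
    hists = [lbp_histogram(f) for f in frames]
    return [sum(col) / len(hists) for col in zip(*hists)]
```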

The resulting signature is an ordered list of mixed symbols. Building the fusion framework raises several technical challenges, including feature heterogeneity, feature variability, and data collection. Mitigation strategies include treating features from different domains separately, fusing them with customized voting mechanisms, and using likelihood-ratio frameworks to identify discriminative symbols. Confidence measures for the detectors and side information about the data and toolchains further improve the reliability of the framework.
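Two of these strategies can be sketched under simplifying assumptions: each signature is treated as a set of symbols (ignoring order), a symbol's discriminativeness for a toolchain is scored as a smoothed log-likelihood ratio of its presence in that toolchain's signatures versus all others, and detector outputs are fused by confidence-weighted voting. The function names, symbol names, and toolchain labels are hypothetical, and the project's customized voting may well differ.

```python
import math
from collections import Counter
from typing import Dict, Sequence, Tuple


def symbol_log_likelihood_ratios(
    in_class: Sequence[Sequence[str]],
    out_class: Sequence[Sequence[str]],
    alpha: float = 1.0,
) -> Dict[str, float]:
    """log P(symbol present | toolchain) - log P(symbol present | other toolchains).

    Presence probabilities use add-alpha (Laplace) smoothing; positive values
    mark symbols that are more typical of the target toolchain.
    """
    pos = Counter(s for sig in in_class for s in set(sig))
    neg = Counter(s for sig in out_class for s in set(sig))
    llr: Dict[str, float] = {}
    for s in set(pos) | set(neg):
        p_in = (pos[s] + alpha) / (len(in_class) + 2 * alpha)
        p_out = (neg[s] + alpha) / (len(out_class) + 2 * alpha)
        llr[s] = math.log(p_in / p_out)
    return llr


def weighted_vote(votes: Sequence[Tuple[str, float]]) -> str:
    """Fuse per-detector (label, confidence) votes by summing confidences per label."""
    scores: Dict[str, float] = {}
    for label, confidence in votes:
        scores[label] = scores.get(label, 0.0) + confidence
    return max(scores, key=scores.get)
```

Weighting each vote by the detector's confidence lets several moderately confident detectors outvote a single confident one, which plain majority voting would treat identically to unanimous low-confidence votes.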

Research Team

Principal investigator: Prof. Alessandro Piva

LESC Members

- Prof. Fabrizio Argenti

- Dr. Daniele Baracchi

- Dr. Massimo Iuliani

- Dr. Dasara Shullani

- Chiara Albisani

- Giulia Bertazzini

DINFO Members

- Prof. Andrew D. Bagdanov

- Dr. Matteo Lapucci

- Simone Magistri

Publications

- Massimo Iuliani, Marco Fontani, and Alessandro Piva, "A Leak in PRNU Based Source Identification - Questioning Fingerprint Uniqueness", IEEE Access, 2021

- Dasara Shullani, Daniele Baracchi, Massimo Iuliani, and Alessandro Piva, "Social Network Identification of Laundered Videos Based on DCT Coefficient Analysis", IEEE Signal Processing Letters, 2022

- Sebastiano Verde, Cecilia Pasquini, Federica Lago, Alessandro Goller, Francesco G. B. De Natale, Alessandro Piva, and Giulia Boato, "Multi-Clue Reconstruction of Sharing Chains for Social Media Images", IEEE Transactions on Multimedia, 2023

- Simone Magistri, Daniele Baracchi, Dasara Shullani, Andrew D. Bagdanov, and Alessandro Piva, "Towards Continual Social Network Identification", International Workshop on Biometrics and Forensics (IWBF), 2023

- Daniele Baracchi, Dasara Shullani, Massimo Iuliani, and Alessandro Piva, "FloreView: An Image and Video Dataset for Forensic Analysis", IEEE Access, 2023